Pyomo Documentation 6.8.0

Pyomo is a Python-based, open-source optimization modeling language with a diverse set of optimization capabilities.

Installation

Pyomo currently supports the following versions of Python:

CPython: 3.8, 3.9, 3.10, 3.11, 3.12

PyPy: 3

At the time of the first Pyomo release after the end-of-life of a minor Python version, Pyomo will remove testing for that Python version.

Using CONDA

We recommend installation with conda, which is included with the

Anaconda distribution of Python. You can install Pyomo in your system

Python installation by executing the following in a shell:

conda install -c conda-forge pyomo

Optimization solvers are not installed with Pyomo, but some open source

optimization solvers can be installed with conda as well:

conda install -c conda-forge ipopt glpk

Using PIP

The standard utility for installing Python packages is pip. You

can install Pyomo in your system Python installation by executing

the following in a shell:

pip install pyomo

Conditional Dependencies

Extensions to Pyomo, and many of the contributions in pyomo.contrib,

often have conditional dependencies on a variety of third-party Python

packages including but not limited to: matplotlib, networkx, numpy,

openpyxl, pandas, pint, pymysql, pyodbc, pyro4, scipy, sympy, and

xlrd.

A full list of conditional dependencies can be found in Pyomo’s

setup.py and displayed using:

python setup.py dependencies --extra optional

Pyomo extensions that require any of these packages will generate an error message for missing dependencies upon use.

When using pip, all conditional dependencies can be installed at once using the following command:

pip install 'pyomo[optional]'

When using conda, many of the conditional dependencies are included with the standard Anaconda installation.

You can check which Python packages you have installed using the command

conda list or pip list. Additional Python packages may be

installed as needed.
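As a complement to conda list and pip list, the following minimal sketch probes for the optional packages named above from within Python, using only the standard library. The helper name missing_optional is hypothetical, and the list assumes that each package is imported under the name shown (Pyro4 is the import name of the pyro4 distribution).

```python
import importlib.util

# Import names for the optional packages listed above (assumption: Pyro4 is
# the import name of the pyro4 distribution).
OPTIONAL = ["matplotlib", "networkx", "numpy", "openpyxl", "pandas", "pint",
            "pymysql", "pyodbc", "Pyro4", "scipy", "sympy", "xlrd"]


def missing_optional(names=OPTIONAL):
    """Return the names that cannot be found on the current import path."""
    return [n for n in names if importlib.util.find_spec(n) is None]


print("Missing optional packages:", missing_optional())
```

Any name reported as missing can then be installed with conda or pip as described above.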

Installation with Cython

Users can opt to install Pyomo with cython initialized.

Note

This can only be done via pip or from source.

Via pip:

pip install pyomo --global-option="--with-cython"

From source (recommended for advanced users only):

git clone https://github.com/Pyomo/pyomo.git

cd pyomo

python setup.py install --with-cython

Citing Pyomo

Pyomo

Bynum, Michael L., Gabriel A. Hackebeil, William E. Hart, Carl D. Laird, Bethany L. Nicholson, John D. Siirola, Jean-Paul Watson, and David L. Woodruff. Pyomo - Optimization Modeling in Python, 3rd Edition. Springer, 2021.

Hart, William E., Jean-Paul Watson, and David L. Woodruff. “Pyomo: modeling and solving mathematical programs in Python.” Mathematical Programming Computation 3, no. 3 (2011): 219-260.

PySP

Watson, Jean-Paul, David L. Woodruff, and William E. Hart. “PySP: modeling and solving stochastic programs in Python.” Mathematical Programming Computation 4, no. 2 (2012): 109-149.

Pyomo Overview

Mathematical Modeling

This section provides an introduction to Pyomo: Python Optimization Modeling Objects. A more complete description is contained in the [PyomoBookIII] book. Pyomo supports the formulation and analysis of mathematical models for complex optimization applications. This capability is commonly associated with commercially available algebraic modeling languages (AMLs) such as [AMPL], [AIMMS], and [GAMS]. Pyomo’s modeling objects are embedded within Python, a full-featured, high-level programming language that contains a rich set of supporting libraries.

Modeling is a fundamental process in many aspects of scientific research, engineering and business. Modeling involves the formulation of a simplified representation of a system or real-world object. Thus, modeling tools like Pyomo can be used in a variety of ways:

Explain phenomena that arise in a system,

Make predictions about future states of a system,

Assess key factors that influence phenomena in a system,

Identify extreme states in a system that might represent worst-case scenarios or minimal cost plans, and

Analyze trade-offs to support human decision makers.

Mathematical models represent system knowledge with a formalized language. The following mathematical concepts are central to modern modeling activities:

Variables

Variables represent unknown or changing parts of a model (e.g., whether or not to make a decision, or the characteristic of a system outcome). The values taken by the variables are often referred to as a solution and are usually an output of the optimization process.

Parameters

Parameters represent the data that must be supplied to perform the optimization. In fact, in some settings the word data is used in place of the word parameters.

Relations

These are equations, inequalities or other mathematical relationships that define how different parts of a model are connected to each other.

Goals

These are functions that reflect goals and objectives for the system being modeled.

The widespread availability of computing resources has made the numerical analysis of mathematical models a commonplace activity. Without a modeling language, the process of setting up input files, executing a solver and extracting the final results from the solver output is tedious and error-prone. This difficulty is compounded in complex, large-scale real-world applications which are difficult to debug when errors occur. Additionally, there are many different formats used by optimization software packages, and few formats are recognized by many optimizers. Thus the application of multiple optimization solvers to analyze a model introduces additional complexities.

Pyomo is an AML that extends Python to include objects for mathematical modeling. [PyomoBookI], [PyomoBookII], [PyomoBookIII], and [PyomoJournal] compare Pyomo with other AMLs. Although many good AMLs have been developed for optimization models, the following are motivating factors for the development of Pyomo:

Open Source

Pyomo is developed within Pyomo’s open source project to promote transparency of the modeling framework and encourage community development of Pyomo capabilities.

Customizable Capability

Pyomo supports a customizable capability through the extensive use of plug-ins to modularize software components.

Solver Integration

Pyomo models can be optimized with solvers that are written either in Python or in compiled, low-level languages.

Programming Language

Pyomo leverages a high-level programming language, which has several advantages over custom AMLs: a very robust language, extensive documentation, a rich set of standard libraries, support for modern programming features like classes and functions, and portability to many platforms.

Overview of Modeling Components and Processes

Pyomo supports an object-oriented design for the definition of optimization models. The basic steps of a simple modeling process are:

Create model and declare components

Instantiate the model

Apply solver

Interrogate solver results

In practice, these steps may be applied repeatedly with different data or with different constraints applied to the model. However, we focus on this simple modeling process to illustrate different strategies for modeling with Pyomo.

A Pyomo model consists of a collection of modeling components that define different aspects of the model. Pyomo includes the modeling components that are commonly supported by modern AMLs: index sets, symbolic parameters, decision variables, objectives, and constraints. These modeling components are defined in Pyomo through the following Python classes:

Set

set data that is used to define a model instance

Param

parameter data that is used to define a model instance

Var

decision variables in a model

Objective

expressions that are minimized or maximized in a model

Constraint

constraint expressions that impose restrictions on variable values in a model

Abstract Versus Concrete Models

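A mathematical model can be defined using symbols that represent data values. For example, the following equations represent a linear program (LP) to find optimal values for the vector \(x\) with scalar parameters \(m\) and \(n\), parameter matrix \(a\), and parameter vectors \(b\) and \(c\):

\[
\begin{array}{lll}
\min & \sum_{j=1}^{n} c_j x_j & \\
\mathrm{s.t.} & \sum_{j=1}^{n} a_{ij} x_j \geq b_i & \forall i = 1 \ldots m \\
& x_j \geq 0 & \forall j = 1 \ldots n
\end{array}
\]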

Note

As a convenience, we use the symbol \(\forall\) to mean “for all” or “for each.”

We call this an abstract or symbolic mathematical model since it

relies on unspecified parameter values. Data values can be used to

specify a model instance. The AbstractModel class provides a

context for defining and initializing abstract optimization models in

Pyomo when the data values will be supplied at the time a solution is to

be obtained.

In many contexts, a mathematical model can and should be directly defined with the data values supplied at the time of the model definition. We call these concrete mathematical models. For example, the following LP model is a concrete instance of the previous abstract model:

\[
\begin{array}{ll}
\min & 2 x_1 + 3 x_2 \\
\mathrm{s.t.} & 3 x_1 + 4 x_2 \geq 1 \\
& x_1, x_2 \geq 0
\end{array}
\]

The ConcreteModel class is used to define concrete optimization

models in Pyomo.

Note

Python programmers will probably prefer to write concrete models, while users of some other algebraic modeling languages may tend to prefer to write abstract models. The choice is largely a matter of taste; some applications may be a little more straightforward using one or the other.

Simple Models

A Simple Concrete Pyomo Model

It is possible to get the same flexible behavior from models declared to be abstract and models declared to be concrete in Pyomo; however, we will focus on a straightforward concrete example here where the data is hard-wired into the model file. Python programmers will quickly realize that the data could have come from other sources.

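Given the following model from the previous section:

\[
\begin{array}{ll}
\min & 2 x_1 + 3 x_2 \\
\mathrm{s.t.} & 3 x_1 + 4 x_2 \geq 1 \\
& x_1, x_2 \geq 0
\end{array}
\]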

This can be implemented as a concrete model as follows:

import pyomo.environ as pyo

model = pyo.ConcreteModel()

model.x = pyo.Var([1,2], domain=pyo.NonNegativeReals)

model.OBJ = pyo.Objective(expr = 2*model.x[1] + 3*model.x[2])

model.Constraint1 = pyo.Constraint(expr = 3*model.x[1] + 4*model.x[2] >= 1)

Although rule functions can also be used to specify constraints and

objectives, in this example we use the expr option that is available

only in concrete models. This option gives a direct specification of the

expression.

A Simple Abstract Pyomo Model

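We repeat the abstract model from the previous section:

\[
\begin{array}{lll}
\min & \sum_{j=1}^{n} c_j x_j & \\
\mathrm{s.t.} & \sum_{j=1}^{n} a_{ij} x_j \geq b_i & \forall i = 1 \ldots m \\
& x_j \geq 0 & \forall j = 1 \ldots n
\end{array}
\]

One way to implement this in Pyomo is shown as follows: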

import pyomo.environ as pyo

model = pyo.AbstractModel()

model.m = pyo.Param(within=pyo.NonNegativeIntegers)

model.n = pyo.Param(within=pyo.NonNegativeIntegers)

model.I = pyo.RangeSet(1, model.m)

model.J = pyo.RangeSet(1, model.n)

model.a = pyo.Param(model.I, model.J)

model.b = pyo.Param(model.I)

model.c = pyo.Param(model.J)

# the next line declares a variable indexed by the set J

model.x = pyo.Var(model.J, domain=pyo.NonNegativeReals)

def obj_expression(m):
    return pyo.summation(m.c, m.x)

model.OBJ = pyo.Objective(rule=obj_expression)

def ax_constraint_rule(m, i):
    # return the expression for the constraint for i
    return sum(m.a[i,j] * m.x[j] for j in m.J) >= m.b[i]

# the next line creates one constraint for each member of the set model.I

model.AxbConstraint = pyo.Constraint(model.I, rule=ax_constraint_rule)

Note

Python is interpreted one line at a time. A line continuation

character, \ (backslash), is used for Python statements that need to span

multiple lines. In Python, indentation has meaning and must be

consistent. For example, lines inside a function definition must be

indented and the end of the indentation is used by Python to signal

the end of the definition.

We will now examine the lines in this example. The first import line is required in every Pyomo model. Its purpose is to make the symbols used by Pyomo known to Python.

import pyomo.environ as pyo

The declaration of a model is also required. The use of the name model

is not required. Almost any name could be used, but we will use the name

model in most of our examples. In this example, we are declaring

that it will be an abstract model.

model = pyo.AbstractModel()

We declare the parameters \(m\) and \(n\) using the Pyomo

Param component. This component can take a variety of arguments; this

example illustrates use of the within option that is used by Pyomo

to validate the data value that is assigned to the parameter. If this

option were not given, then Pyomo would not object to any type of data

being assigned to these parameters. As it is, assignment of a value that

is not a non-negative integer will result in an error.

model.m = pyo.Param(within=pyo.NonNegativeIntegers)

model.n = pyo.Param(within=pyo.NonNegativeIntegers)

Although not required, it is convenient to define index sets. In this

example we use the RangeSet component to declare that the sets will

be a sequence of integers starting at 1 and ending at a value specified

by the parameters model.m and model.n.

model.I = pyo.RangeSet(1, model.m)

model.J = pyo.RangeSet(1, model.n)

The coefficient and right-hand-side data are defined as indexed

parameters. When sets are given as arguments to the Param component,

they indicate that the set will index the parameter.

model.a = pyo.Param(model.I, model.J)

model.b = pyo.Param(model.I)

model.c = pyo.Param(model.J)

The next line that is interpreted by Python as part of the model

declares the variable \(x\). The first argument to the Var

component is a set, so it is defined as an index set for the variable. In

this case the variable has only one index set, but multiple sets could

be used as was the case for the declaration of the parameter

model.a. The second argument specifies a domain for the

variable. This information is part of the model and will be passed to the

solver when data is provided and the model is solved. Specification of

the NonNegativeReals domain implements the requirement that the

variables be greater than or equal to zero.

# the next line declares a variable indexed by the set J

model.x = pyo.Var(model.J, domain=pyo.NonNegativeReals)

Note

In Python, and therefore in Pyomo, any text after a pound sign (#) is considered to be a comment.

In abstract models, Pyomo expressions are usually provided to objective

and constraint declarations via a function defined with a

Python def statement. The def statement establishes a name for a

function along with its arguments. When Pyomo uses a function to get

objective or constraint expressions, it always passes in the

model (i.e., itself) as the first argument so the model is always

the first formal argument when declaring such functions in Pyomo.

Additional arguments, if needed, follow. Since summation is an extremely

common part of optimization models, Pyomo provides a flexible function

to accommodate it. When given two arguments, the summation() function

returns an expression for the sum of the product of the two arguments

over their indexes. This only works, of course, if the two arguments

have the same indexes. If it is given only one argument it returns an

expression for the sum over all indexes of that argument. So in this

example, when summation() is passed the arguments m.c, m.x

it returns an internal representation of the expression

\(\sum_{j=1}^{n}c_{j} x_{j}\).

def obj_expression(m):
    return pyo.summation(m.c, m.x)

To declare an objective function, the Pyomo component called

Objective is used. The rule argument gives the name of a

function that returns the objective expression. The default sense is

minimization. For maximization, the sense=pyo.maximize argument must be

used. The name that is declared, which is OBJ in this case, appears

in some reports and can be almost any name.

model.OBJ = pyo.Objective(rule=obj_expression)

Declaration of constraints is similar. A function is declared to generate

the constraint expression. In this case, there can be multiple

constraints of the same form because we index the constraints by

\(i\) in the expression \(\sum_{j=1}^n a_{ij} x_j \geq b_i

\;\;\forall i = 1 \ldots m\), which states that we need a constraint for

each value of \(i\) from one to \(m\). In order to parametrize

the expression by \(i\) we include it as a formal parameter to the

function that declares the constraint expression. Technically, we could

have used anything for this argument, but that might be confusing. Using

an i for an \(i\) seems sensible in this situation.

def ax_constraint_rule(m, i):
    # return the expression for the constraint for i
    return sum(m.a[i,j] * m.x[j] for j in m.J) >= m.b[i]

Note

In Python, indexes are in square brackets and function arguments are in parentheses.

In order to declare constraints that use this expression, we use the

Pyomo Constraint component that takes a variety of arguments. In this

case, our model specifies that we can have more than one constraint of

the same form and we have created a set, model.I, over which these

constraints can be indexed so that is the first argument to the

constraint declaration. The next argument gives the rule that

will be used to generate expressions for the constraints. Taken as a

whole, this constraint declaration says that a list of constraints

indexed by the set model.I will be created and for each member of

model.I, the function ax_constraint_rule will be called and it

will be passed the model object as well as the member of model.I.

# the next line creates one constraint for each member of the set model.I

model.AxbConstraint = pyo.Constraint(model.I, rule=ax_constraint_rule)

In the object-oriented view of all of this, we would say that the model

object is an instance of the AbstractModel class, and

model.J is a Set object that is contained by this model. Many

modeling components in Pyomo can be optionally specified as indexed

components: collections of components that are referenced using one or

more values. In this example, the parameter model.c is indexed with

set model.J.

In order to use this model, data must be given for the values of the

parameters. Here is one file that provides data (in AMPL “.dat” format).

# one way to input the data in AMPL format

# for indexed parameters, the indexes are given before the value

param m := 1 ;

param n := 2 ;

param a :=

1 1 3

1 2 4

;

param c:=

1 2

2 3

;

param b := 1 1 ;

There are multiple formats that can be used to provide data to a Pyomo model, but the AMPL format works well for our purposes because it contains the names of the data elements together with the data. In AMPL data files, text after a pound sign is treated as a comment. Lines generally do not matter, but statements must be terminated with a semi-colon.

For this particular data file, there is one constraint, so the value of

model.m will be one and there are two variables (i.e., the vector

model.x is two elements long) so the value of model.n will be

two. These two values are set with standard

assignments. Notice that in AMPL format input, the name of the model is

omitted.

param m := 1 ;

param n := 2 ;

There is only one constraint, so only two values are needed for

model.a. When assigning values to arrays and vectors in AMPL format,

one way to do it is to give the index(es) and the value. The line 1

2 4 causes model.a[1,2] to get the value

4. Since model.c has only one index, only one index value is needed

so, for example, the line 1 2 causes model.c[1] to get the

value 2. Line breaks generally do not matter in AMPL format data files,

so the assignment of the value for the single index of model.b is

given on one line since that is easy to read.

param a :=

1 1 3

1 2 4

;

param c:=

1 2

2 3

;

param b := 1 1 ;

Symbolic Index Sets

When working with Pyomo (or any other AML), it is convenient to write abstract models in a somewhat more abstract way by using index sets that contain strings rather than index sets that are implied by \(1,\ldots,m\) or the summation from 1 to \(n\). When this is done, the size of the set is implied by the input, rather than specified directly. Furthermore, the index entries may have no real order. Often, a mixture of integers and strings as indexes is needed in the same model. To start with an illustration of general indexes, consider a slightly different Pyomo implementation of the model we just presented.

# ___________________________________________________________________________

#

# Pyomo: Python Optimization Modeling Objects

# Copyright (c) 2008-2024

# National Technology and Engineering Solutions of Sandia, LLC

# Under the terms of Contract DE-NA0003525 with National Technology and

# Engineering Solutions of Sandia, LLC, the U.S. Government retains certain

# rights in this software.

# This software is distributed under the 3-clause BSD License.

# ___________________________________________________________________________

# abstract2.py

from pyomo.environ import *

model = AbstractModel()

model.I = Set()

model.J = Set()

model.a = Param(model.I, model.J)

model.b = Param(model.I)

model.c = Param(model.J)

# the next line declares a variable indexed by the set J

model.x = Var(model.J, domain=NonNegativeReals)

def obj_expression(model):
    return summation(model.c, model.x)

model.OBJ = Objective(rule=obj_expression)

def ax_constraint_rule(model, i):
    # return the expression for the constraint for i
    return sum(model.a[i, j] * model.x[j] for j in model.J) >= model.b[i]

# the next line creates one constraint for each member of the set model.I

model.AxbConstraint = Constraint(model.I, rule=ax_constraint_rule)

To get the same instantiated model, the following data file can be used.

# abstract2a.dat AMPL format

set I := 1 ;

set J := 1 2 ;

param a :=

1 1 3

1 2 4

;

param c:=

1 2

2 3

;

param b := 1 1 ;

However, this model can also be fed different data for problems of the same general form using meaningful indexes.

# abstract2.dat AMPL data format

set I := TV Film ;

set J := Graham John Carol ;

param a :=

TV Graham 3

TV John 4.4

TV Carol 4.9

Film Graham 1

Film John 2.4

Film Carol 1.1

;

param c := [*]

Graham 2.2

John 3.1416

Carol 3

;

param b := TV 1 Film 1 ;

Solving the Simple Examples

Pyomo supports modeling and scripting but does not install a solver

automatically. In order to solve a model, there must be a solver

installed on the computer to be used. If there is a solver, then the

pyomo command can be used to solve a problem instance.

Suppose that the solver named glpk (also known as glpsol) is installed

on the computer. Suppose further that an abstract model is in the file

named abstract1.py and a data file for it is in the file named

abstract1.dat. From the command prompt, with both files in the

current directory, a solution can be obtained with the command:

pyomo solve abstract1.py abstract1.dat --solver=glpk

Since glpk is the default solver, there really is no need to specify it, so

the --solver option can be dropped.

Note

There are two dashes before the command line option names such as

solver.

To continue the example, if CPLEX is installed then it can be listed as the solver. The command to solve with CPLEX is

pyomo solve abstract1.py abstract1.dat --solver=cplex

This yields the following output on the screen:

[ 0.00] Setting up Pyomo environment

[ 0.00] Applying Pyomo preprocessing actions

[ 0.07] Creating model

[ 0.15] Applying solver

[ 0.37] Processing results

Number of solutions: 1

Solution Information

Gap: 0.0

Status: optimal

Function Value: 0.666666666667

Solver results file: results.json

[ 0.39] Applying Pyomo postprocessing actions

[ 0.39] Pyomo Finished

The numbers in square brackets indicate how much time was required for

each step. Results are written to the file named results.json, which

has a special structure that makes it useful for post-processing. To see

a summary of results written to the screen, use the --summary

option:

pyomo solve abstract1.py abstract1.dat --solver=cplex --summary

To see a list of Pyomo command line options, use:

pyomo solve --help

Note

There are two dashes before help.

For a concrete model, no data file is specified on the Pyomo command line.

Pyomo Modeling Components

Sets

Declaration

Sets can be declared using instances of the Set and RangeSet

classes or by

assigning set expressions. The simplest set declaration creates a set

and postpones creation of its members:

model.A = pyo.Set()

The Set class takes optional arguments such as:

dimen = Dimension of the members of the set

doc = String describing the set

filter = A Boolean function used during construction to indicate if a potential new member should be assigned to the set

initialize = An iterable containing the initial members of the Set, or a function that returns an iterable of the initial members of the set

ordered = A Boolean indicator that the set is ordered; the default is True

validate = A Boolean function that validates new member data

within = Set used for validation; it is a super-set of the set being declared

In general, Pyomo attempts to infer the “dimensionality” of Set

components (that is, the number of apparent indices) when they are

constructed. However, there are situations where Pyomo either cannot

detect a dimensionality (e.g., a Set that was not initialized with any

members), or the user may want to assert the dimensionality of the

set. This can be accomplished through the dimen keyword. For

example, to create a set whose members will be tuples with two items, one

could write:

model.B = pyo.Set(dimen=2)

To create a set of all the numbers in set model.A doubled, one could

use

def DoubleA_init(model):
    return (i*2 for i in model.A)

model.C = pyo.Set(initialize=DoubleA_init)

As an aside we note that as always in Python, there are a lot of ways to

accomplish the same thing. Also, note that this will generate an error

if model.A contains elements for which multiplication times two is

not defined.

The initialize option can accept any Python iterable, including a

set, list, or tuple. This data may be returned from a

function or specified directly as in

model.D = pyo.Set(initialize=['red', 'green', 'blue'])

The initialize option can also specify either a generator or a

function to specify the Set members. In the case of a generator, all

data yielded by the generator will become the initial set members:

def X_init(m):
    for i in range(10):
        yield 2*i+1

model.X = pyo.Set(initialize=X_init)

For initialization functions, Pyomo supports two signatures. In the

first, the function returns an iterable (set, list, or

tuple) containing the data with which to initialize the Set:

def Y_init(m):
    return [2*i+1 for i in range(10)]

model.Y = pyo.Set(initialize=Y_init)

In the second signature, the function is called for each element,

passing the element number in as an extra argument. This is repeated

until the function returns the special value Set.End:

def Z_init(model, i):
    if i > 10:
        return pyo.Set.End
    return 2*i+1

model.Z = pyo.Set(initialize=Z_init)

Note that the element number starts with 1 and not 0:

>>> model.X.pprint()
X : Size=1, Index=None, Ordered=Insertion
    Key  : Dimen : Domain : Size : Members
    None :     1 :    Any :   10 : {1, 3, 5, 7, 9, 11, 13, 15, 17, 19}
>>> model.Y.pprint()
Y : Size=1, Index=None, Ordered=Insertion
    Key  : Dimen : Domain : Size : Members
    None :     1 :    Any :   10 : {1, 3, 5, 7, 9, 11, 13, 15, 17, 19}
>>> model.Z.pprint()
Z : Size=1, Index=None, Ordered=Insertion
    Key  : Dimen : Domain : Size : Members
    None :     1 :    Any :   10 : {3, 5, 7, 9, 11, 13, 15, 17, 19, 21}

Additional information about iterators for set initialization is in the [PyomoBookIII] book.

Note

For abstract models, data specified in an input file or through the

data argument to AbstractModel.create_instance() will

override the data

specified by the initialize options.

If sets are given as arguments to Set without keywords, they are

interpreted as indexes for an array of sets. For example, to create an

array of sets that is indexed by the members of the set model.A, use:

model.E = pyo.Set(model.A)

Arguments can be combined. For example, to create an array of sets,

indexed by set model.A where each set contains three-dimensional

members, use:

model.F = pyo.Set(model.A, dimen=3)

The initialize option can be used to create a set that contains a

sequence of numbers, but the RangeSet class provides a concise

mechanism for simple sequences. This class takes as its arguments a

start value, a final value, and a step size. If the RangeSet has

only a single argument, then that value defines the final value in the

sequence; the first value and step size default to one. If two values are

given, they are the first and last value in the sequence and the step

size defaults to one. For example, the following declaration creates a

set with the numbers 1.5, 5 and 8.5:

model.G = pyo.RangeSet(1.5, 10, 3.5)

Operations

Sets may also be created by storing the result of set operations using other Pyomo sets. Pyomo supports set operations including union, intersection, difference, and symmetric difference:

model.I = model.A | model.D # union

model.J = model.A & model.D # intersection

model.K = model.A - model.D # difference

model.L = model.A ^ model.D # exclusive-or

Pyomo also supports the cross product of sets; the cross-product operator is the asterisk (*). To define

a new set M that is the cross product of sets B and C, one

could use

model.M = model.B * model.C

This creates a virtual set that holds references to the original sets,

so any updates to the original sets (B and C) will be reflected

in the new set (M). In contrast, you can also create a concrete

set, which directly stores the values of the cross product at the time

of creation and will not reflect subsequent changes in the original

sets with:

model.M_concrete = pyo.Set(initialize=model.B * model.C)

Finally, to indicate that the members of a set are restricted to be in the

cross product of two other sets, one can use the within keyword:

model.N = pyo.Set(within=model.B * model.C)

Predefined Virtual Sets

For use in specifying domains for sets, parameters and variables, Pyomo provides the following pre-defined virtual sets:

Any = all possible values

Reals = floating point values

PositiveReals = strictly positive floating point values

NonPositiveReals = non-positive floating point values

NegativeReals = strictly negative floating point values

NonNegativeReals = non-negative floating point values

PercentFraction = floating point values in the interval [0,1]

UnitInterval = alias for PercentFraction

Integers = integer values

PositiveIntegers = positive integer values

NonPositiveIntegers = non-positive integer values

NegativeIntegers = negative integer values

NonNegativeIntegers = non-negative integer values

Boolean = Boolean values, which can be represented as False/True, 0/1, ’False’/’True’ and ’F’/’T’

Binary = the integers {0, 1}

For example, if the set model.O is declared to be within the virtual

set NegativeIntegers then an attempt to add anything other than a

negative integer will result in an error. Here is the declaration:

model.O = pyo.Set(within=pyo.NegativeIntegers)

Sparse Index Sets

Sets provide indexes for parameters, variables and other sets. Index set issues are important for modelers in part because of efficiency considerations, but primarily because the right choice of index sets can result in very natural formulations that are conducive to understanding and maintenance. Pyomo leverages Python to provide a rich collection of options for index set creation and use.

The choice of how to represent indexes often depends on the application and the nature of the instance data that are expected. To illustrate some of the options and issues, we will consider problems involving networks. In many network applications, it is useful to declare a set of nodes, such as

model.Nodes = pyo.Set()

and then a set of arcs can be created with reference to the nodes.

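Consider the following simple version of the minimum cost flow problem:

\[
\begin{array}{lll}
\min & \sum_{(i,j) \in \mathcal{A}} c_{i,j} x_{i,j} & \\
\mathrm{s.t.} & S_{n} + \sum_{(i,n) \in \mathcal{A}} x_{i,n} - D_{n} - \sum_{(n,j) \in \mathcal{A}} x_{n,j} = 0 & \forall n \in \mathcal{N} \\
& x_{i,j} \geq 0 & \forall (i,j) \in \mathcal{A}
\end{array}
\]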

where

Set: Nodes \(\equiv \mathcal{N}\)

Set: Arcs \(\equiv \mathcal{A} \subseteq \mathcal{N} \times \mathcal{N}\)

Var: Flow on arc \((i,j)\) \(\equiv x_{i,j},\; (i,j) \in \mathcal{A}\)

Param: Flow Cost on arc \((i,j)\) \(\equiv c_{i,j},\; (i,j) \in \mathcal{A}\)

Param: Demand at node \(i\) \(\equiv D_{i},\; i \in \mathcal{N}\)

Param: Supply at node \(i\) \(\equiv S_{i},\; i \in \mathcal{N}\)

In the simplest case, the arcs can just be the cross product of the nodes, which is accomplished by the definition

model.Arcs = model.Nodes*model.Nodes

that creates a set with two dimensional members. For applications where all nodes are always connected to all other nodes this may suffice. However, issues can arise when the network is not fully dense. For example, the burden of avoiding flow on arcs that do not exist falls on the data file where high-enough costs must be provided for those arcs. Such a scheme is not very elegant or robust.

For many network flow applications, it might be better to declare the arcs using

model.Arcs = pyo.Set(dimen=2)

or

model.Arcs = pyo.Set(within=model.Nodes*model.Nodes)

where the difference is that the second version will provide error checking as data is assigned to the set elements: each arc must be a pair of members of model.Nodes. This would enable specification of a sparse network in a natural way. But this results in a need to change the FlowBalance constraint because as it was written in the simple example, it sums over the entire set of nodes for each node. One way to remedy this is to sum only over the members of the set model.Arcs as in

def FlowBalance_rule(m, node):
    return m.Supply[node] \
        + sum(m.Flow[i, node] for i in m.Nodes if (i, node) in m.Arcs) \
        - m.Demand[node] \
        - sum(m.Flow[node, j] for j in m.Nodes if (node, j) in m.Arcs) \
        == 0

This will be OK unless the number of nodes becomes very large for a sparse network, in which case the time to generate this constraint might become an issue (admittedly, only for very large networks, but such networks do exist).

Another method, which comes in handy in many network applications, is to have a set for each node that contains the nodes at the other end of arcs going to the node at hand and another set giving the nodes on out-going arcs. If these sets are called model.NodesIn and model.NodesOut respectively, then the flow balance rule can be re-written as

def FlowBalance_rule(m, node):
    return m.Supply[node] \
        + sum(m.Flow[i, node] for i in m.NodesIn[node]) \
        - m.Demand[node] \
        - sum(m.Flow[node, j] for j in m.NodesOut[node]) \
        == 0

The data for NodesIn and NodesOut could be added to the input

file, and this may be the most efficient option.

For all but the largest networks, rather than reading Arcs,

NodesIn and NodesOut from a data file, it might be more elegant

to read only Arcs from a data file and declare model.NodesIn

with an initialize option specifying the creation as follows:

def NodesIn_init(m, node):
    for i, j in m.Arcs:
        if j == node:
            yield i

model.NodesIn = pyo.Set(model.Nodes, initialize=NodesIn_init)

with a similar definition for model.NodesOut. This code creates an indexed set NodesIn that holds one set of nodes for each node. The full model is:

import pyomo.environ as pyo

model = pyo.AbstractModel()

model.Nodes = pyo.Set()
model.Arcs = pyo.Set(dimen=2)

def NodesOut_init(m, node):
    for i, j in m.Arcs:
        if i == node:
            yield j

model.NodesOut = pyo.Set(model.Nodes, initialize=NodesOut_init)

def NodesIn_init(m, node):
    for i, j in m.Arcs:
        if j == node:
            yield i

model.NodesIn = pyo.Set(model.Nodes, initialize=NodesIn_init)

model.Flow = pyo.Var(model.Arcs, domain=pyo.NonNegativeReals)
model.FlowCost = pyo.Param(model.Arcs)
model.Demand = pyo.Param(model.Nodes)
model.Supply = pyo.Param(model.Nodes)

def Obj_rule(m):
    return pyo.summation(m.FlowCost, m.Flow)

model.Obj = pyo.Objective(rule=Obj_rule, sense=pyo.minimize)

def FlowBalance_rule(m, node):
    return m.Supply[node] \
        + sum(m.Flow[i, node] for i in m.NodesIn[node]) \
        - m.Demand[node] \
        - sum(m.Flow[node, j] for j in m.NodesOut[node]) \
        == 0

model.FlowBalance = pyo.Constraint(model.Nodes, rule=FlowBalance_rule)

For this model, a toy data file (in AMPL “.dat” format) would be:

set Nodes := CityA CityB CityC ;

set Arcs :=

CityA CityB

CityA CityC

CityC CityB

;

param : FlowCost :=

CityA CityB 1.4

CityA CityC 2.7

CityC CityB 1.6

;

param Demand :=

CityA 0

CityB 1

CityC 1

;

param Supply :=

CityA 2

CityB 0

CityC 0

;

This can also be done somewhat more efficiently, and perhaps more clearly,

using a BuildAction (for more information, see BuildAction and BuildCheck):

model.NodesOut = pyo.Set(model.Nodes, within=model.Nodes)
model.NodesIn = pyo.Set(model.Nodes, within=model.Nodes)

def Populate_In_and_Out(model):
    # loop over the arcs and record the end points
    for i, j in model.Arcs:
        model.NodesIn[j].add(i)
        model.NodesOut[i].add(j)

model.In_n_Out = pyo.BuildAction(rule=Populate_In_and_Out)

Sparse Index Sets Example

One may want to have a constraint that holds for all \(i \in I, k \in K, v \in V_k\).

There are many ways to accomplish this, but one good way is to create a set of tuples composed of all (k, v) pairs with k in model.K and v in model.V[k]. This can be done as follows:

def kv_init(m):
    return ((k, v) for k in m.K for v in m.V[k])

model.KV = pyo.Set(dimen=2, initialize=kv_init)

We can now create the constraint \(x_{i,k,v} \leq a_{i,k}y_i \;\forall\; i \in I, k \in K, v \in V_k\) with:

model.a = pyo.Param(model.I, model.K, default=1)
model.y = pyo.Var(model.I)
model.x = pyo.Var(model.I, model.KV)

def c1_rule(m, i, k, v):
    return m.x[i, k, v] <= m.a[i, k] * m.y[i]

model.c1 = pyo.Constraint(model.I, model.KV, rule=c1_rule)

Parameters

The word “parameters” is used in many settings. When discussing a Pyomo

model, we use the word to refer to data that must be provided in order

to find an optimal (or good) assignment of values to the decision

variables. Parameters are declared as instances of a Param

class, which

takes arguments that are somewhat similar to the Set class. For

example, the following code snippet declares sets model.A and

model.B, and then a parameter model.P that is indexed by

model.A and model.B:

model.A = pyo.RangeSet(1,3)

model.B = pyo.Set()

model.P = pyo.Param(model.A, model.B)

In addition to sets that serve as indexes, Param takes

the following options:

default = The parameter value absent any other specification.

doc = A string describing the parameter.

initialize = A function (or Python object) that returns data used to initialize the parameter values.

mutable = Boolean value indicating if the Param values are allowed to change after the Param is initialized.

validate = A callback function that takes the model, proposed value, and indices of the proposed value; returning True if the value is valid. Returning False will generate an exception.

within = Set used for validation; it specifies the domain of valid parameter values.

These options perform in the same way as they do for Set. For example, given model.A with values {1, 2, 3}, there are many ways to create a parameter that represents a square matrix with 9, 16, 25 on the main diagonal and zeros elsewhere. Here are two ways to do it. First, using a Python object to initialize:

v={}

v[1,1] = 9

v[2,2] = 16

v[3,3] = 25

model.S1 = pyo.Param(model.A, model.A, initialize=v, default=0)

And now using an initialization function that is automatically called once for each index tuple (remember that we are assuming that model.A contains {1, 2, 3}):

def s_init(model, i, j):
    if i == j:
        # 9, 16, 25 for i = 1, 2, 3, matching the dictionary above
        return (i + 2) ** 2
    else:
        return 0.0

model.S2 = pyo.Param(model.A, model.A, initialize=s_init)

In this example, the index set contained integers, but index sets need not be numeric. It is very common to use strings.

Note

Data specified in an input file will override the data specified by

the initialize option.

Parameter values can be checked by a validation function. In the following example, every value of the parameter T (indexed by model.A) is checked to be greater than 3.14159. If a value is provided that is not greater than that, the model instantiation will be terminated and an error message issued. The validation function should be written so as to return True if the data is valid and False otherwise.

t_data = {1: 10, 2: 3, 3: 20}

def t_validate(model, v, i):
    return v > 3.14159

model.T = pyo.Param(model.A, validate=t_validate, initialize=t_data)

This example will produce the following error, indicating that the value provided for T[2] failed validation:

Traceback (most recent call last):

...

ValueError: Invalid parameter value: T[2] = '3', value type=<class 'int'>.

Value failed parameter validation rule

Variables

Variables are intended to ultimately be given values by an optimization

package. They are declared and optionally bounded, given initial values,

and documented using the Pyomo Var function. If index sets are given

as arguments to this function they are used to index the variable. Other

optional directives include:

bounds = A function (or Python object) that gives a (lower,upper) bound pair for the variable

domain = A set that is a super-set of the values the variable can take on.

initialize = A function (or Python object) that gives a starting value for the variable; this is particularly important for non-linear models

within = (synonym for domain)

The following code snippet illustrates some aspects of these options by declaring a singleton (i.e. unindexed) variable named model.LumberJack that will take on real values between zero and 6 and is initialized to 1.5:

model.LumberJack = Var(within=NonNegativeReals, bounds=(0, 6), initialize=1.5)

Instead of the initialize option, initialization is sometimes done

with a Python assignment statement as in

model.LumberJack = 1.5

For indexed variables, bounds and initial values are often specified by a rule (a Python function) that itself may make reference to parameters or other data. The formal arguments to these rules begin with the model followed by the indexes. This is illustrated in the following code snippet that makes use of Python dictionaries declared as lb and ub that are used by a function to provide bounds:

model.A = Set(initialize=['Scones', 'Tea'])
lb = {'Scones': 2, 'Tea': 4}
ub = {'Scones': 5, 'Tea': 7}

def fb(model, i):
    return (lb[i], ub[i])

model.PriceToCharge = Var(model.A, domain=PositiveIntegers, bounds=fb)

Note

Many of the pre-defined virtual sets that are used as domains imply

bounds. A strong example is the set Boolean that implies bounds

of zero and one.

Objectives

An objective is a function of variables that returns a value that an

optimization package attempts to maximize or minimize. The Objective

function in Pyomo declares an objective. Although other mechanisms are

possible, this function is typically passed the name of another function

that gives the expression. Here is a very simple version of such a

function that assumes model.x has previously been declared as a

Var:

>>> def ObjRule(model):
...     return 2*model.x[1] + 3*model.x[2]
>>> model.obj1 = pyo.Objective(rule=ObjRule)

It is more common for an objective function to refer to parameters as in

this example that assumes that model.p has been declared as a

Param and that model.x has been declared with the same index

set, while model.y has been declared as a singleton:

>>> def ObjRule(model):
...     return pyo.summation(model.p, model.x) + model.y
>>> model.obj2 = pyo.Objective(rule=ObjRule, sense=pyo.maximize)

This example uses the sense option to specify maximization. The

default sense is minimize.

Constraints

Most constraints are specified using equality or inequality expressions

that are created using a rule, which is a Python function. For example,

if the variable model.x has the indexes ‘butter’ and ‘scones’, then

this constraint limits the sum over these indexes to be exactly three:

def teaOKrule(model):
    return model.x['butter'] + model.x['scones'] == 3

model.TeaConst = Constraint(rule=teaOKrule)

Instead of expressions involving equality (==) or inequalities (<= or >=), constraints can also be expressed using a 3-tuple of the form (lb, expr, ub), which is interpreted as lb <= expr <= ub; either lb or ub can be None to omit that bound. Variables can appear only in the middle expr. For example, the following two constraint declarations have the same meaning:

model.x = Var()

def aRule(model):
    return model.x >= 2

model.Boundx = Constraint(rule=aRule)

def bRule(model):
    return (2, model.x, None)

model.boundx = Constraint(rule=bRule)

For this simple example, it would also be possible to declare

model.x with a bounds option to accomplish the same thing.

Constraints (and objectives) can be indexed by lists or sets. When the

declaration contains lists or sets as arguments, the elements are

iteratively passed to the rule function. If there is more than one, then

the cross product is sent. For example the following constraint could be

interpreted as placing a budget of \(i\) on the

\(i^{\mbox{th}}\) item to buy where the cost per item is given by

the parameter model.a:

model.A = RangeSet(1, 10)
model.a = Param(model.A, within=PositiveReals)
model.ToBuy = Var(model.A)

def bud_rule(model, i):
    return model.a[i] * model.ToBuy[i] <= i

# attach the constraint to the model so that it becomes part of it
model.aBudget = Constraint(model.A, rule=bud_rule)

Note

Python and Pyomo are case sensitive so model.a is not the same as

model.A.

Expressions

In this section, we use the word “expression” in two ways: first in the

general sense of the word and second to describe a class of Pyomo objects

that have the name Expression as described in the subsection on

expression objects.

Rules to Generate Expressions

Both objectives and constraints make use of rules to generate expressions. These are Python functions that return the appropriate expression. These are first-class functions that can access global data as well as data passed in, including the model object.

Operations on model elements result in expressions, which seems natural in expressions like the constraints we have seen so far. It is also possible to build up expressions. The following example illustrates this, along with a reference to global Python data in the form of a Python variable called switch:

switch = 3

model.A = RangeSet(1, 10)
model.c = Param(model.A)
model.d = Param()
model.x = Var(model.A, domain=Boolean)

def pi_rule(model):
    accexpr = summation(model.c, model.x)
    if switch >= 2:
        accexpr = accexpr - model.d
    return accexpr >= 0.5

# attach the constraint to the model so that it becomes part of it
model.PieSlice = Constraint(rule=pi_rule)

In this example, the constraint that is generated depends on the value

of the Python variable called switch. If the value is 2 or greater,

then the constraint is summation(model.c, model.x) - model.d >= 0.5;

otherwise, the model.d term is not present.

Warning

Because model elements result in expressions, not values, the following does not work as expected in an abstract model!

model.A = RangeSet(1, 10)
model.c = Param(model.A)
model.d = Param()
model.x = Var(model.A, domain=Boolean)

def pi_rule(model):
    accexpr = summation(model.c, model.x)
    if model.d >= 2:  # NOT in an abstract model!!
        accexpr = accexpr - model.d
    return accexpr >= 0.5

model.PieSlice = Constraint(rule=pi_rule)

The trouble is that model.d >= 2 results in an expression, not its evaluated value. Instead use if value(model.d) >= 2.

Note

Pyomo supports non-linear expressions and can call non-linear solvers such as Ipopt.

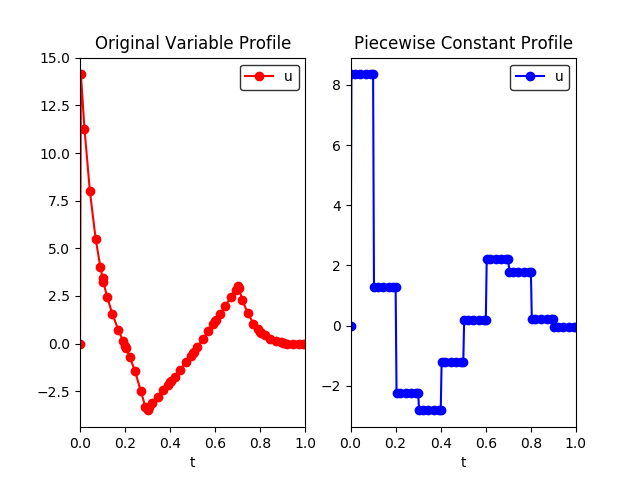

Piecewise Linear Expressions

Pyomo has facilities to add piecewise constraints of the form y=f(x) for a variety of forms of the function f.

The piecewise types other than SOS2, BIGM_SOS1, BIGM_BIN are implemented as described in the paper [Vielma_et_al].

There are two basic forms for the declaration of the constraint:

# model.pwconst = Piecewise(indexes, yvar, xvar, **Keywords)

# model.pwconst = Piecewise(yvar,xvar,**Keywords)

where pwconst can be replaced by a name appropriate for the application. The choice depends on whether the x and y variables are indexed. If so, they must have the same index sets and these sets are given as the first arguments.

Keywords:

pw_pts={ },[ ],( )

A dictionary of lists (where keys are the index set) or a single list (for the non-indexed case or when an identical set of breakpoints is used across all indices) defining the set of domain breakpoints for the piecewise linear function.

Note

pw_pts is always required. These give the breakpoints for the piecewise function and are expected to fully span the bounds for the independent variable(s).

pw_repn=<Option>

Indicates the type of piecewise representation to use. This can have a major impact on solver performance. Options: (Default “SOS2”)

“SOS2” - Standard representation using sos2 constraints.

“BIGM_BIN” - BigM constraints with binary variables. The theoretically tightest M values are automatically determined.

“BIGM_SOS1” - BigM constraints with sos1 variables. The theoretically tightest M values are automatically determined.

“DCC” - Disaggregated convex combination model.

“DLOG” - Logarithmic disaggregated convex combination model.

“CC” - Convex combination model.

“LOG” - Logarithmic branching convex combination.

“MC” - Multiple choice model.

“INC” - Incremental (delta) method.

Note

Step functions are supported for all but the two BIGM options. Refer to the ‘force_pw’ option.

pw_constr_type= <Option>

Indicates the bound type of the piecewise function. Options:

“UB” - y variable is bounded above by piecewise function.

“LB” - y variable is bounded below by piecewise function.

“EQ” - y variable is equal to the piecewise function.

f_rule=f(model,i,j,…,x), { }, [ ], ( )

An object that returns a numeric value that is the range value corresponding to each piecewise domain point. For functions, the first argument must be a Pyomo model. The last argument is the domain value at which the function evaluates (not a Pyomo Var). Intermediate arguments are the corresponding indices of the Piecewise component (if any). Otherwise, the object can be a dictionary of lists/tuples (with keys the same as the indexing set) or a single list/tuple (when no indexing set is used or when all indices use an identical piecewise function). Examples:

# A function that changes with index
def f(model, j, x):
    if j == 2:
        return x**2 + 1.0
    else:
        return x**2 + 5.0

# A nonlinear function
f = lambda model, x: exp(x) + value(model.p)

# A step function
f = [0, 0, 1, 1, 2, 2]

force_pw=True/False

Using the given function rule and pw_pts, a check for convexity/concavity is implemented. If (1) the function is convex and the piecewise constraints are lower bounds or if (2) the function is concave and the piecewise constraints are upper bounds then the piecewise constraints will be substituted for linear constraints. Setting ‘force_pw=True’ will force the use of the original piecewise constraints even when one of these two cases applies.

warning_tol=<float>

To aid in debugging, a warning is printed when consecutive slopes of piecewise segments are within <warning_tol> of each other. Default=1e-8

warn_domain_coverage=True/False

Print a warning when the feasible region of the domain variable is not completely covered by the piecewise breakpoints. Default=True

unbounded_domain_var=True/False

Allow an unbounded or partially bounded Pyomo Var to be used as the domain variable. Default=False

Note

This does not imply unbounded piecewise segments will be constructed. The outermost piecewise breakpoints will bound the domain variable at each index. However, the Var attributes .lb and .ub will not be modified.

Here is an example of an assignment to a Python dictionary variable that has keywords for a piecewise constraint:

kwds = {'pw_constr_type': 'EQ', 'pw_repn': 'SOS2', 'sense': maximize, 'force_pw': True}

Here is a simple example based on the example given earlier in

Symbolic Index Sets. In this new example, the objective function is the

sum of c times x to the fourth. In this example, the keywords are passed

directly to the Piecewise function without being assigned to a

dictionary variable. The upper bound on the x variables was chosen

whimsically just to make the example. The important thing to note is

that variables that are going to appear as the independent variable in a

piecewise constraint must have bounds.

# ___________________________________________________________________________

#

# Pyomo: Python Optimization Modeling Objects

# Copyright (c) 2008-2024

# National Technology and Engineering Solutions of Sandia, LLC

# Under the terms of Contract DE-NA0003525 with National Technology and

# Engineering Solutions of Sandia, LLC, the U.S. Government retains certain

# rights in this software.

# This software is distributed under the 3-clause BSD License.

# ___________________________________________________________________________

# abstract2piece.py

# Similar to abstract2.py, but the objective is now c times x to the fourth power

from pyomo.environ import *

model = AbstractModel()

model.I = Set()

model.J = Set()

Topx = 6.1 # range of x variables

model.a = Param(model.I, model.J)

model.b = Param(model.I)

model.c = Param(model.J)

# the next line declares a variable indexed by the set J

model.x = Var(model.J, domain=NonNegativeReals, bounds=(0, Topx))

model.y = Var(model.J, domain=NonNegativeReals)

# to avoid warnings, we set breakpoints at or beyond the bounds

PieceCnt = 100

bpts = []

for i in range(PieceCnt + 2):
    bpts.append(float((i * Topx) / PieceCnt))

def f4(model, j, xp):
    # we do not need j, but it is passed as the index for the constraint
    return xp**4

model.ComputeObj = Piecewise(
    model.J, model.y, model.x, pw_pts=bpts, pw_constr_type='EQ', f_rule=f4
)

def obj_expression(model):
    return summation(model.c, model.y)

model.OBJ = Objective(rule=obj_expression)

def ax_constraint_rule(model, i):
    # return the expression for the constraint for i
    return sum(model.a[i, j] * model.x[j] for j in model.J) >= model.b[i]

# the next line creates one constraint for each member of the set model.I
model.AxbConstraint = Constraint(model.I, rule=ax_constraint_rule)

A more advanced example is provided in abstract2piecebuild.py in BuildAction and BuildCheck.

Expression Objects

Pyomo Expression objects are very similar to the Param component

(with mutable=True) except that the underlying values can be numeric

constants or Pyomo expressions. Here’s an illustration of expression

objects in an AbstractModel. An expression object with an index set

that is the numbers 1, 2, 3 is created and initialized to be the model

variable x times the index. Later in the model file, just to illustrate

how to do it, the expression is changed but just for the first index to

be x squared.

model = AbstractModel()
model.x = Var(initialize=1.0)

def _e(m, i):
    return m.x * i

model.e = Expression([1, 2, 3], rule=_e)

instance = model.create_instance()

print(value(instance.e[1]))  # -> 1.0
print(instance.e[1]())  # -> 1.0
print(instance.e[1].expr)  # -> a pyomo expression object

# Change the underlying expression
instance.e[1].set_value(instance.x**2)

# ... solve
# ... load results

# print the value of the expression given the loaded optimal solution
print(value(instance.e[1]))

An alternative is to create Python functions that, potentially, manipulate model objects. E.g., if you define a function

def f(x, p):
    return x + p

You can call this function with or without Pyomo modeling components as the arguments. E.g., f(2,3) will return a number, whereas f(model.x, 3) will return a Pyomo expression due to operator overloading.

If you take this approach you should note that anywhere a Pyomo expression is used to generate another expression (e.g., f(model.x, 3) + 5), the initial expression is always cloned so that the new generated expression is independent of the old. For example:

model = ConcreteModel()

model.x = Var()

# create a Pyomo expression

e1 = model.x + 5

# create another Pyomo expression

# e1 is copied when generating e2

e2 = e1 + model.x

If you want to create an expression that is shared between other

expressions, you can use the Expression component.

Suffixes

Suffixes provide a mechanism for declaring extraneous model data, which can be used in a number of contexts. Most commonly, suffixes are used by solver plugins to store extra information about the solution of a model. This and other suffix functionality is made available to the modeler through the use of the Suffix component class. Uses of Suffix include:

Importing extra information from a solver about the solution of a mathematical program (e.g., constraint duals, variable reduced costs, basis information).

Exporting information to a solver or algorithm to aid in solving a mathematical program (e.g., warm-starting information, variable branching priorities).

Tagging modeling components with local data for later use in advanced scripting algorithms.

Suffix Notation and the Pyomo NL File Interface

The Suffix component used in Pyomo has been adapted from the suffix notation used in the modeling language AMPL [AMPL]. Therefore, it follows naturally that AMPL style suffix functionality is fully available using Pyomo’s NL file interface. For information on AMPL style suffixes the reader is referred to the AMPL website:

A number of scripting examples that highlight the use of AMPL-style suffix functionality are available in the examples/pyomo/suffixes directory distributed with Pyomo.

Declaration

The effects of declaring a Suffix component on a Pyomo model are determined by the following traits:

direction: This trait defines the direction of information flow for the suffix. A suffix direction can be assigned one of four possible values:

LOCAL - suffix data stays local to the modeling framework and will not be imported or exported by a solver plugin (default)

IMPORT - suffix data will be imported from the solver by its respective solver plugin

EXPORT - suffix data will be exported to a solver by its respective solver plugin

IMPORT_EXPORT - suffix data flows in both directions between the model and the solver or algorithm

datatype: This trait advertises the type of data held on the suffix for those interfaces where it matters (e.g., the NL file interface). A suffix datatype can be assigned one of three possible values:

FLOAT - the suffix stores floating point data (default)

INT - the suffix stores integer data

None - the suffix stores any type of data

Note

Exporting suffix data through Pyomo’s NL file interface requires that all active export suffixes have a strict datatype (i.e., datatype=None is not allowed).

The following code snippet shows examples of declaring a Suffix component on a Pyomo model:

import pyomo.environ as pyo

model = pyo.ConcreteModel()

# Export integer data

model.priority = pyo.Suffix(
    direction=pyo.Suffix.EXPORT, datatype=pyo.Suffix.INT)

# Export and import floating point data

model.dual = pyo.Suffix(direction=pyo.Suffix.IMPORT_EXPORT)

# Store floating point data

model.junk = pyo.Suffix()

Declaring a Suffix with a non-local direction on a model is not guaranteed to be compatible with all solver plugins in Pyomo. Whether a given Suffix is acceptable or not depends on both the solver and solver interface being used. In some cases, a solver plugin will raise an exception if it encounters a Suffix type that it does not handle, but this is not true in every situation. For instance, the NL file interface is generic to all AMPL-compatible solvers, so there is no way to validate that a Suffix of a given name, direction, and datatype is appropriate for a solver. One should be careful in verifying that Suffix declarations are being handled as expected when switching to a different solver or solver interface.

Operations

The Suffix component class provides a dictionary interface for mapping Pyomo modeling components to arbitrary data. This mapping functionality is captured within the ComponentMap base class, which is also available within Pyomo’s modeling environment. The ComponentMap can be used as a more lightweight replacement for Suffix in cases where a simple mapping from Pyomo modeling components to arbitrary data values is required.

Note

ComponentMap and Suffix use the built-in id() function for

hashing entry keys. This design decision arises from the fact that

most of the modeling components found in Pyomo are either not

hashable or use a hash based on a mutable numeric value, making them

unacceptable for use as keys with the built-in dict class.

Warning

The use of the built-in id() function for hashing entry keys in

ComponentMap and Suffix makes them inappropriate for use in

situations where built-in object types must be used as keys. It is

strongly recommended that only Pyomo modeling components be used as

keys in these mapping containers (Var, Constraint, etc.).

Warning

Do not attempt to pickle or deepcopy instances of ComponentMap or Suffix unless doing so along with the components for which they hold mapping entries. As an example, placing one of these objects on a model and then cloning or pickling that model is an acceptable scenario.

In addition to the dictionary interface provided through the ComponentMap base class, the Suffix component class also provides a number of methods whose default semantics are more convenient for working with indexed modeling components. The easiest way to highlight this functionality is through the use of an example.

model = pyo.ConcreteModel()

model.x = pyo.Var()

model.y = pyo.Var([1,2,3])

model.foo = pyo.Suffix()

In this example we have a concrete Pyomo model with two different types of variable components (indexed and non-indexed) as well as a Suffix declaration (foo). The next code snippet shows examples of adding entries to the suffix foo.

# Assign a suffix value of 1.0 to model.x

model.foo.set_value(model.x, 1.0)

# Same as above with dict interface

model.foo[model.x] = 1.0

# Assign a suffix value of 0.0 to all indices of model.y

# By default this expands so that entries are created for

# every index (y[1], y[2], y[3]) and not model.y itself

model.foo.set_value(model.y, 0.0)

# The same operation using the dict interface results in an entry only

# for the parent component model.y

model.foo[model.y] = 50.0

# Assign a suffix value of -1.0 to model.y[1]

model.foo.set_value(model.y[1], -1.0)

# Same as above with the dict interface

model.foo[model.y[1]] = -1.0

In this example we highlight the fact that the __setitem__ and set_value entry methods can be used interchangeably except in the case where indexed components are used (model.y). In the indexed case, the __setitem__ approach creates a single entry for the parent indexed component itself, whereas the set_value approach by default creates an entry for each index of the component. This behavior can be controlled using the optional keyword expand, where assigning it a value of False results in the same behavior as __setitem__.

Other operations like accessing or removing entries in our mapping can be performed as if the built-in dict class is in use.

>>> print(model.foo.get(model.x))

1.0

>>> print(model.foo[model.x])

1.0

>>> print(model.foo.get(model.y[1]))

-1.0

>>> print(model.foo[model.y[1]])

-1.0

>>> print(model.foo.get(model.y[2]))

0.0

>>> print(model.foo[model.y[2]])

0.0

>>> print(model.foo.get(model.y))

50.0

>>> print(model.foo[model.y])

50.0

>>> del model.foo[model.y]

>>> print(model.foo.get(model.y))

None

>>> print(model.foo[model.y])

Traceback (most recent call last):

...

KeyError: "Component with id '...': y"

The non-dict method clear_value can be used in place of __delitem__ to remove entries, where it inherits the same default behavior as set_value for indexed components and does not raise a KeyError when the argument does not exist as a key in the mapping.

>>> model.foo.clear_value(model.y)

>>> print(model.foo[model.y[1]])

Traceback (most recent call last):

...

KeyError: "Component with id '...': y[1]"

>>> del model.foo[model.y[1]]

Traceback (most recent call last):

...

KeyError: "Component with id '...': y[1]"

>>> model.foo.clear_value(model.y[1])

A summary of the non-dict Suffix methods is provided here:

clearAllValues()
    Clears all suffix data.

clear_value(component, expand=True)
    Clears suffix information for a component.

setAllValues(value)
    Sets the value of this suffix on all components.

setValue(component, value, expand=True)
    Sets the value of this suffix on the specified component.

updateValues(data_buffer, expand=True)
    Updates the suffix data given a list of component,value tuples. Provides an improvement in efficiency over calling setValue on every component.

getDatatype()
    Return the suffix datatype.

setDatatype(datatype)
    Set the suffix datatype.

getDirection()
    Return the suffix direction.

setDirection(direction)
    Set the suffix direction.

importEnabled()
    Returns True when this suffix is enabled for import from solutions.

exportEnabled()
    Returns True when this suffix is enabled for export to solvers.

Importing Suffix Data

Importing suffix information from a solver solution is achieved by declaring a Suffix component with the appropriate name and direction. Suffix names available for import may be specific to third-party solvers as well as individual solver interfaces within Pyomo. The most common of these, available with most solvers and solver interfaces, is constraint dual multipliers. Requesting that duals be imported into suffix data can be accomplished by declaring a Suffix component on the model.

model = pyo.ConcreteModel()

model.dual = pyo.Suffix(direction=pyo.Suffix.IMPORT)

model.x = pyo.Var()

model.obj = pyo.Objective(expr=model.x)

model.con = pyo.Constraint(expr=model.x >= 1.0)

The existence of an active suffix with the name dual that has an import-style suffix direction will cause constraint dual information to be collected into the solver results (assuming the solver supplies dual information). After loading solver results into a problem instance (using a Python script or Pyomo callback functions in conjunction with the pyomo command), one can access the dual values associated with constraints using the dual Suffix component.

>>> results = pyo.SolverFactory('glpk').solve(model)

>>> pyo.assert_optimal_termination(results)

>>> print(model.dual[model.con])

1.0

Alternatively, the pyomo option --solver-suffixes can be used to request suffix information from a solver. When suffix names are provided via this command-line option, the pyomo script will automatically declare these Suffix components on the constructed instance, making these suffixes available for import.

Exporting Suffix Data

Exporting suffix data is accomplished in a manner similar to importing suffix data. One simply needs to declare a Suffix component on the model with an export-style suffix direction and associate modeling component values with it. The following example shows how one can declare a special ordered set of type 1 using AMPL-style suffix notation in conjunction with Pyomo’s NL file interface.

model = pyo.ConcreteModel()

model.y = pyo.Var([1,2,3], within=pyo.NonNegativeReals)

model.sosno = pyo.Suffix(direction=pyo.Suffix.EXPORT)

model.ref = pyo.Suffix(direction=pyo.Suffix.EXPORT)

# Add entry for each index of model.y

model.sosno.set_value(model.y, 1)

model.ref[model.y[1]] = 0

model.ref[model.y[2]] = 1

model.ref[model.y[3]] = 2

Most AMPL-compatible solvers will recognize the suffix names sosno

and ref as declaring a special ordered set, where a positive value

for sosno indicates a special ordered set of type 1 and a negative

value indicates a special ordered set of type 2.

Note

Pyomo provides the SOSConstraint component for declaring special

ordered sets, which is recognized by all solver interfaces, including

the NL file interface.

Pyomo’s NL file interface will recognize an EXPORT style Suffix component with the name ‘dual’ as supplying initializations for constraint multipliers. As such, it is treated separately from all other EXPORT style suffixes encountered in the NL writer, which are treated as AMPL-style suffixes. The following example script shows how one can warm start the interior-point solver Ipopt by supplying both primal (variable values) and dual (suffixes) solution information. This dual suffix information can be both imported and exported using a single Suffix component with an IMPORT_EXPORT direction.

model = pyo.ConcreteModel()

model.x1 = pyo.Var(bounds=(1,5),initialize=1.0)

model.x2 = pyo.Var(bounds=(1,5),initialize=5.0)

model.x3 = pyo.Var(bounds=(1,5),initialize=5.0)

model.x4 = pyo.Var(bounds=(1,5),initialize=1.0)

model.obj = pyo.Objective(
    expr=model.x1*model.x4*(model.x1 + model.x2 + model.x3) + model.x3)

model.inequality = pyo.Constraint(
    expr=model.x1*model.x2*model.x3*model.x4 >= 25.0)

model.equality = pyo.Constraint(
    expr=model.x1**2 + model.x2**2 + model.x3**2 + model.x4**2 == 40.0)

### Declare all suffixes

# Ipopt bound multipliers (obtained from solution)

model.ipopt_zL_out = pyo.Suffix(direction=pyo.Suffix.IMPORT)

model.ipopt_zU_out = pyo.Suffix(direction=pyo.Suffix.IMPORT)

# Ipopt bound multipliers (sent to solver)

model.ipopt_zL_in = pyo.Suffix(direction=pyo.Suffix.EXPORT)

model.ipopt_zU_in = pyo.Suffix(direction=pyo.Suffix.EXPORT)

# Obtain dual solutions from first solve and send to warm start

model.dual = pyo.Suffix(direction=pyo.Suffix.IMPORT_EXPORT)

ipopt = pyo.SolverFactory('ipopt')

The difference in performance can be seen by examining Ipopt’s iteration log with and without warm starting:

Without Warmstart:

ipopt.solve(model, tee=True)

...
iter    objective    inf_pr   inf_du lg(mu)  ||d||  lg(rg) alpha_du alpha_pr  ls
   0  1.6109693e+01 1.12e+01 5.28e-01  -1.0 0.00e+00    -  0.00e+00 0.00e+00   0
   1  1.6982239e+01 7.30e-01 1.02e+01  -1.0 6.11e-01    -  7.19e-02 1.00e+00f  1
   2  1.7318411e+01 ...
...
   8  1.7014017e+01 ...
Number of Iterations....: 8
...

With Warmstart:

### Set Ipopt options for warm-start

# The current values on the ipopt_zU_out and ipopt_zL_out suffixes will
# be used as initial conditions for the bound multipliers to solve the
# new problem

model.ipopt_zL_in.update(model.ipopt_zL_out)

model.ipopt_zU_in.update(model.ipopt_zU_out)

ipopt.options['warm_start_init_point'] = 'yes'

ipopt.options['warm_start_bound_push'] = 1e-6

ipopt.options['warm_start_mult_bound_push'] = 1e-6

ipopt.options['mu_init'] = 1e-6

ipopt.solve(model, tee=True)

...
iter    objective    inf_pr   inf_du lg(mu)  ||d||  lg(rg) alpha_du alpha_pr  ls
   0  1.7014032e+01 2.00e-06 4.07e-06  -6.0 0.00e+00    -  0.00e+00 0.00e+00   0
   1  1.7014019e+01 3.65e-12 1.00e-11  -6.0 2.50e-01    -  1.00e+00 1.00e+00h  1
   2  1.7014017e+01 ...
Number of Iterations....: 2
...

Using Suffixes With an AbstractModel

In order to allow the declaration of suffix data within the framework of

an AbstractModel, the Suffix component can be initialized with an

optional construction rule. As with constraint rules, this function will

be executed at the time of model construction. The following simple

example highlights the use of the rule keyword in suffix

initialization. Suffix rules are expected to return an iterable of

(component, value) tuples, where the expand=True semantics are

applied for indexed components.

model = pyo.AbstractModel()

model.x = pyo.Var()

model.c = pyo.Constraint(expr=model.x >= 1)

def foo_rule(m):
    return ((m.x, 2.0), (m.c, 3.0))

model.foo = pyo.Suffix(rule=foo_rule)

>>> # Instantiate the model

>>> inst = model.create_instance()

>>> print(inst.foo[inst.x])

2.0

>>> print(inst.foo[inst.c])

3.0

>>> # Note that model.x and inst.x are not the same object

>>> print(inst.foo[model.x])

Traceback (most recent call last):

...

KeyError: "Component with id '...': x"

The next example shows an abstract model where suffixes are attached only to the variables:

model = pyo.AbstractModel()

model.I = pyo.RangeSet(1,4)

model.x = pyo.Var(model.I)

def c_rule(m, i):
    return m.x[i] >= i

model.c = pyo.Constraint(model.I, rule=c_rule)

def foo_rule(m):
    return ((m.x[i], 3.0*i) for i in m.I)

model.foo = pyo.Suffix(rule=foo_rule)

>>> # instantiate the model

>>> inst = model.create_instance()

>>> for i in inst.I:

...     print((i, inst.foo[inst.x[i]]))

(1, 3.0)

(2, 6.0)

(3, 9.0)

(4, 12.0)

Solving Pyomo Models

Solving ConcreteModels

If you have a ConcreteModel, add these lines at the bottom of your Python script to solve it:

>>> opt = pyo.SolverFactory('glpk')

>>> opt.solve(model)

Solving AbstractModels

If you have an AbstractModel, you must create a concrete instance of your model before solving it using the same lines as above:

>>> instance = model.create_instance()

>>> opt = pyo.SolverFactory('glpk')

>>> opt.solve(instance)

pyomo solve Command

To solve a ConcreteModel contained in the file my_model.py using the

pyomo command and the solver GLPK, use the following line in a

terminal window:

pyomo solve my_model.py --solver='glpk'

To solve an AbstractModel contained in the file my_model.py with data

in the file my_data.dat using the pyomo command and the solver GLPK,

use the following line in a terminal window:

pyomo solve my_model.py my_data.dat --solver='glpk'

Supported Solvers

Pyomo supports a wide variety of solvers. Pyomo has specialized

interfaces to some solvers (for example, BARON, CBC, CPLEX, and Gurobi).

It also has generic interfaces that support calling any solver that can

read AMPL “.nl” and write “.sol” files and the ability to

generate GAMS-format models and retrieve the results. You can get the

current list of supported solvers using the pyomo command: